In this article I'll show you how easy it is to scrape data from websites using Google sheets. It's very basic in nature and can not be compared with web crawler scripts designed in python or in other programming languages but it's easy and who knows it might fit your needs.

Before I start, it's important to understand the ethical issue with web scraping, although it's not a big of an issue with Google sheets and what I'm about to teach but it's important and you should know if you ever thought about moving to Python for more advance web scraping because a single error in the request variable might cause DDOS attack on that website.

The most important thing is not to scrape any proprietary or copyrighted information and any information that can be used to identify a person be it Email addresses, phone numbers, etc. is considered personal data and EU has very strict laws and violation of it might cause you trouble.

Now there are several ways you can scrape data from a website like by visiting a website and copying and pasting the relevant data from the website to your spreadsheet, this will do the same job but the data is going to be static, if there's any modification made to the website you have to do the whole process of copying and pasting again which can be tiresome if you're dealing with large amount of dynamic data.

The other approach would be using a function which will update our spreadsheet if there's any change made to the data on the website and where we don't have to copy paste anything. There are two functions for the very same purpose that I'm going to be discuss in this tutorial : IMPORTHTML() and IMPORTXML().

Disclaimer : This tutorial is only for educational purpose only.

IMPORTHTML() : Imports data from a 'table' or a 'list' within an HTML Page.

Syntax :

=IMPORTHTML(url, "query", index)

url - The URL of the page to examine, including protocol (e.g. http://).

- The value for url must either be enclosed in quotation marks or be a reference to a cell containing the appropriate text.

query - Either "list" or "table" depending on what type of structure contains the desired data.

index - The index, starting at 1, which identifies which table or list as defined in the HTML source should be returned.

- The indices for lists and tables are maintained separately, so there may be both a list and a table with index 1 if both types of elements exist on the HTML page.

Usage :

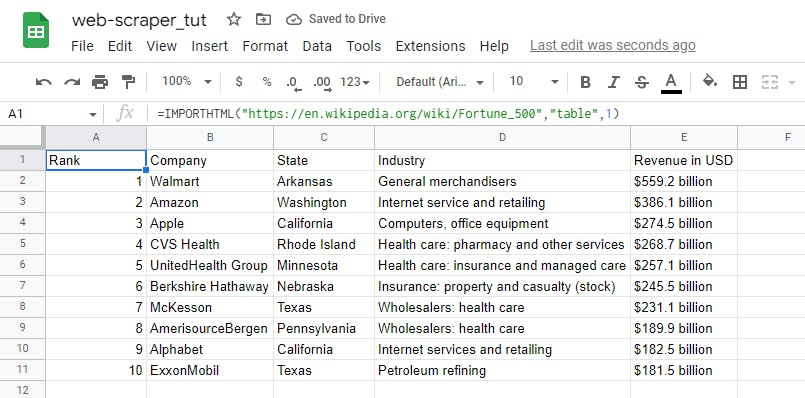

=IMPORTHTML("https://en.wikipedia.org/wiki/Fortune_500" , "table", 1)

=IMPORTHTML(A1,B1,C1)

Example :

Open a blank spreadsheet

Type the function or you can copy paste the above code snippet in your spreadsheet it'll do the same thing. In this example I'm using the list of Top ten Fortune 500 companies from Wikipedia :

- You will see that only the first table scraped from the website because we choose Index 1 :

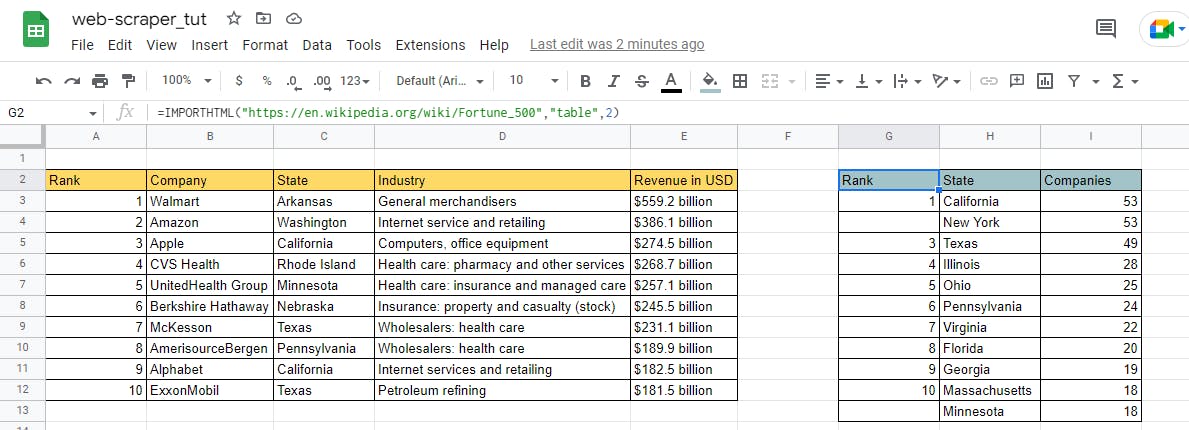

- Let's scrape another table below (for fun) :

Here you'll see that I've used the Index 2, because I wanted to scrape the second table to my spreadsheet. I also added the borders and a light shade of color for headers to make it look fancy.

IMPORTXML() : Imports data from any of various structured data types including XML, HTML, CSV, TSV, and RSS and ATOM XML feeds.

Syntax :

=IMPORTXML(url, xpath_query)

url - The URL of the page to examine, including protocol (e.g. http://).

- value for url must either be enclosed in quotation marks or be a reference to a cell containing the appropriate text.

xpath_query - The string that instructs the function on the kind of data we are attempting to import. XPath query is used to work with structured data.

Usage :

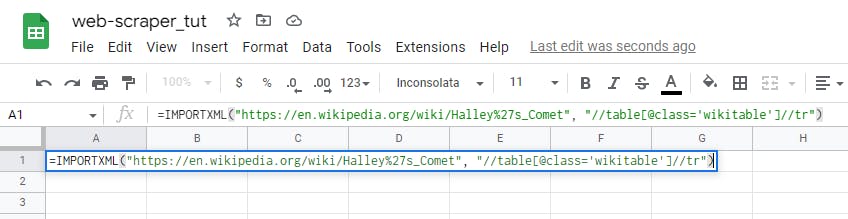

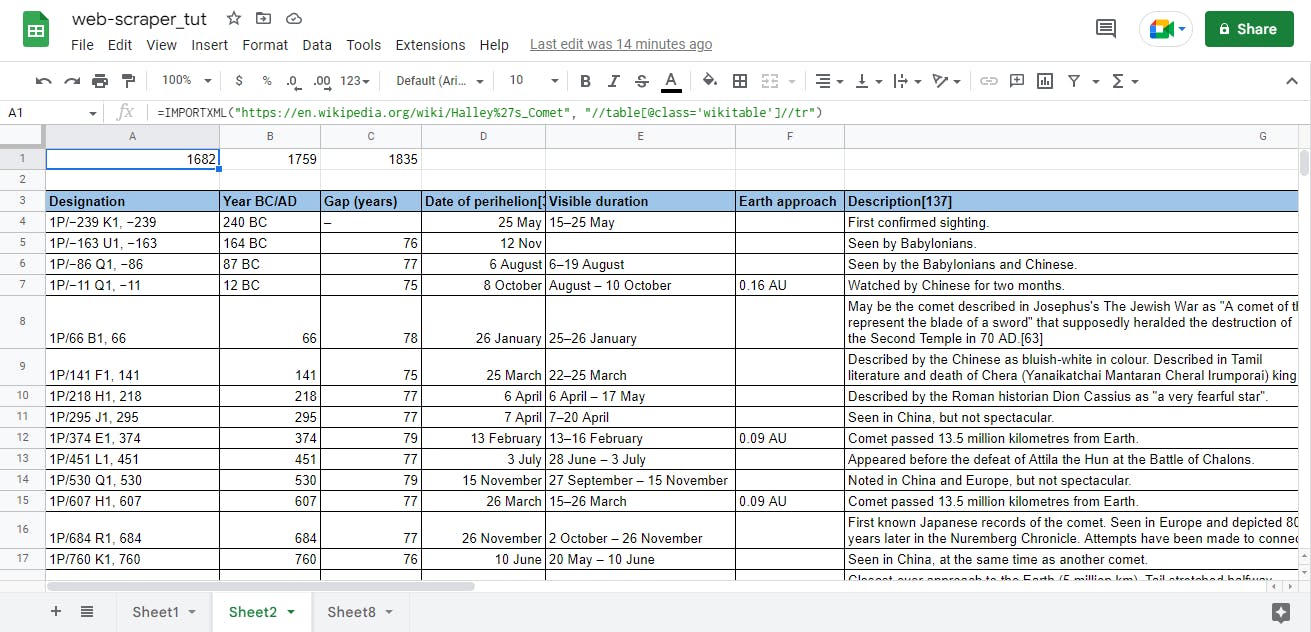

=IMPORTXML("https://en.wikipedia.org/wiki/Halley%27s_Comet", "//table[@class='wikitable']//tr")

=IMPORTXML(A2,B2)

Example :

Open a blank spreadsheet

Type the function and the URL or you can paste the above code snippet in your spreadsheet if you're trying for the first time. In this example I'm using the sighting of Halley's Comet data :

You might have questions about what's going on with the xpath attribute and what the heck is table and class? Well, these are HTML elements. All I did here was to look for table tag in the HTML webpage and it's class because a single Website could have multiple table tags and even have multiple classes, if you want to be more specific you can use id instead of class when available.

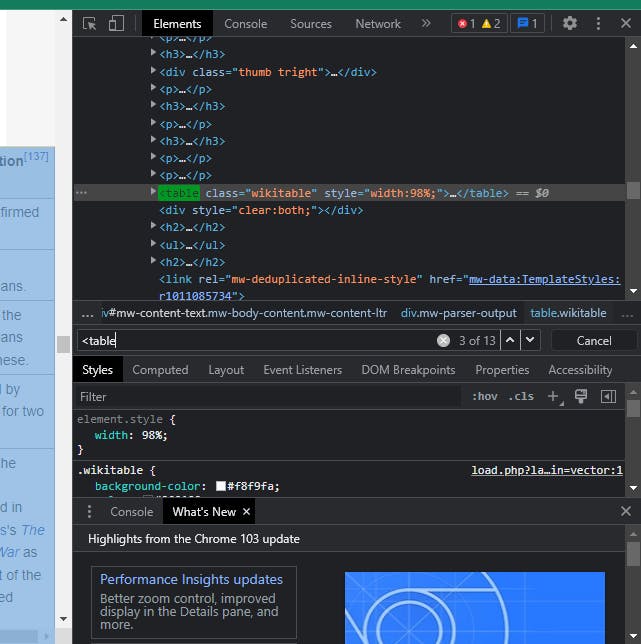

Here's how you can look for the specific tags or elements from where you want to scrape your data :

- Right click on the website from where you want to scrape data, Press 'Inspect' or 'Inspect Element'.

- A new window will pop in front of you with a lot of code, don't be scared because this is the fun part.

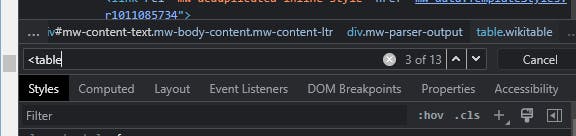

- Now if you know even just a little bit about HTML tags and elements you can easily find the things you are looking for. In the above example I want to scrape all the data in the table and I know that in HTML there's a

<table>tag for this purpose only. So I went straight for that by typing :

CTRL + F(This will open a search box)

In the search box, type the tag you want to search.

- When you hover your cursor over the Element, it'll highlight it's function in view pane on the left side (Chrome) so you can be sure that the tag you selected is correct.

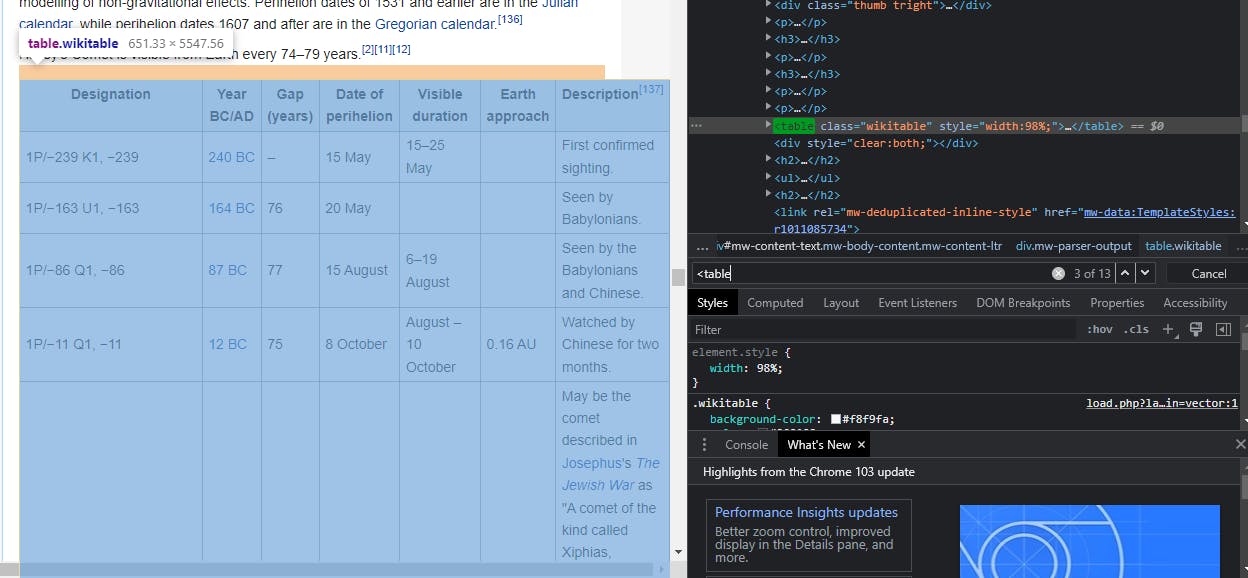

You can see the highlighted area in light blue shade.

If you want to learn more about xpath check out here , and for HTML tags and Elements you should check out here.

- The final result would be something like this (Again I've added borders and color for header) :

Here you go, this is all you need to scrape data from websites using Google Sheets. This might come really handy if you want to derive insights for your small project or if you want to learn about scraping in general but If you are someone who often have to scrape data, My suggestion would be to use programming language like Python. They are way more efficient. Just respect laws and others privacy.